Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

- SS&C Blue Prism Community

- Get Help

- Product Forum

- Out Of Memory Exception

Options

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Out Of Memory Exception

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

27-10-20 01:04 PM

Hi,

I am getting out of memory exception while trying to retrieve data from excel using OLEDB object. Excel has more than 2 lakhs plus rows and 26 columns. Could you please suggest the best approach to avoid this exception or please suggest the alternate approaches to read data from huge volume excels.

Thanks IN advance.

------------------------------

Vishnu Deepthi Chintala

Technology Analyst

Infosys

UTC

------------------------------

I am getting out of memory exception while trying to retrieve data from excel using OLEDB object. Excel has more than 2 lakhs plus rows and 26 columns. Could you please suggest the best approach to avoid this exception or please suggest the alternate approaches to read data from huge volume excels.

Thanks IN advance.

------------------------------

Vishnu Deepthi Chintala

Technology Analyst

Infosys

UTC

------------------------------

6 REPLIES 6

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

27-10-20 02:13 PM

I would look into limiting the number of rows you're retrieving at one time. You could use a range when you specify the sheet in the OLEDB query -- I believe it's something like

------------------------------

Nicholas Zejdlik

RPA Developer

------------------------------

Select * From Sheet1$A1:Z500. You could then process that particular chunk, and then increment the rows numbers to A501:Z1000 and so on.------------------------------

Nicholas Zejdlik

RPA Developer

------------------------------

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

27-10-20 05:24 PM

Hi Vishnu,

Are you able to open the excel manually without getting the excel hanged?

If yes, then use Excel VBO only to modify/transform the data since the excel VBO behaves exactly same as tasks being performed manually. If the excel is getting hanged and you are not able to fetch the data then you can opt for OLEDB approach.

------------------------------

Ritansh Jatwani

Consultant

EY

Asia/Kolkata

------------------------------

Are you able to open the excel manually without getting the excel hanged?

If yes, then use Excel VBO only to modify/transform the data since the excel VBO behaves exactly same as tasks being performed manually. If the excel is getting hanged and you are not able to fetch the data then you can opt for OLEDB approach.

------------------------------

Ritansh Jatwani

Consultant

EY

Asia/Kolkata

------------------------------

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

27-10-20 11:35 PM

With 200k rows and 26 columns you most likely won't be able to get that data into blueprism collection all at once. And even if you could, most actions involving that collection will get OOM exception either way. So that leaves a question what are you going to do with that data? Do you really need it all at once? Consider processing it in batches if you can.

------------------------------

Andrey Kudinov

Project Manager

MobileTelesystems PJSC

Europe/Moscow

------------------------------

------------------------------

Andrey Kudinov

Project Manager

MobileTelesystems PJSC

Europe/Moscow

------------------------------

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

29-10-20 04:44 AM

As Andrey Says,

Based on the amount of rows and columns, handle it with collections will result in Out of Memory exception.

Some alternatives could be:

If you want to make a file, log or something like that:

- You could modify the OLEDB VBO in order that instead of allocating the rows inside a collection, writhe them inside a file,

If you need to load it into Work queue

- You could try the approach of using LIMIT inside you QUERY, to get a few rows, load them in the WQ, then iterate thouhg the registers using SKIP inside you QUERY

- Traverse the excel file using MS Excel VBO and navigate thorugh the file, by certain amount of rows.

If you have more doubts, feel free to contact me, and i could help you.

------------------------------

Miguel Carrillo

------------------------------

Based on the amount of rows and columns, handle it with collections will result in Out of Memory exception.

Some alternatives could be:

If you want to make a file, log or something like that:

- You could modify the OLEDB VBO in order that instead of allocating the rows inside a collection, writhe them inside a file,

If you need to load it into Work queue

- You could try the approach of using LIMIT inside you QUERY, to get a few rows, load them in the WQ, then iterate thouhg the registers using SKIP inside you QUERY

- Traverse the excel file using MS Excel VBO and navigate thorugh the file, by certain amount of rows.

If you have more doubts, feel free to contact me, and i could help you.

------------------------------

Miguel Carrillo

------------------------------

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

29-11-21 05:05 PM

Hi,

I am having the similar kind of issue.

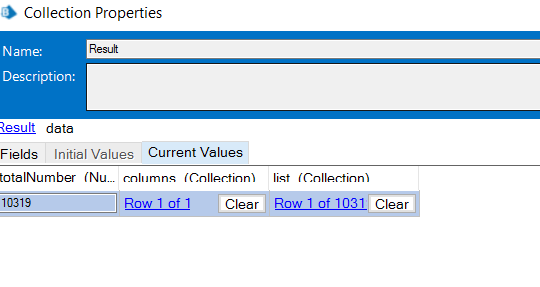

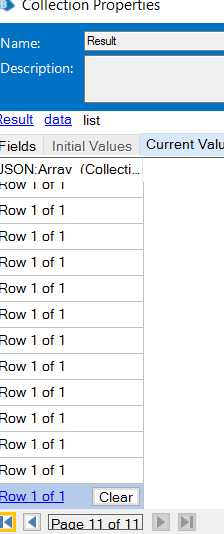

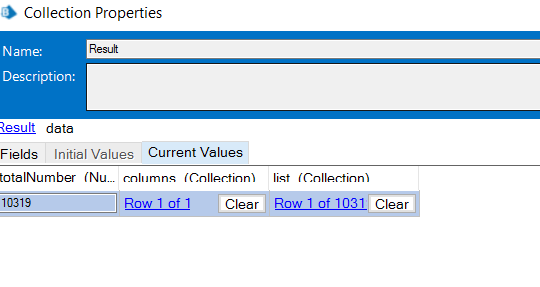

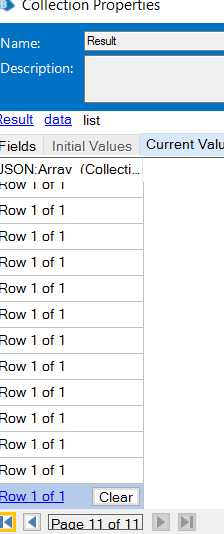

Getting the API response in collection. Collection has nested collection(Data<list<JSON:Array). something like below screenshot.

JSON:Array will be having more than 20k records.

Please help me on that.

------------------------------

Bhawana Daultani

------------------------------

I am having the similar kind of issue.

Getting the API response in collection. Collection has nested collection(Data<list<JSON:Array). something like below screenshot.

JSON:Array will be having more than 20k records.

Please help me on that.

------------------------------

Bhawana Daultani

------------------------------

Options

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

30-11-21 07:35 AM

@BhawanaDaultani,

If you can process records separately, then you can probably write some code to return individual records and work with it, although likely painfully slow

If you need to work with a whole dataset at once for some reason, e.g. you need to pull some stats from it, then try doing whole thing in a code stage to avoid blueprism collections overhead or use external tools.

It doesn't look like we are getting Blueprism with 64 bit core before Half life 3 🙂

------------------------------

Andrey Kudinov

Project Manager

MobileTelesystems PJSC

Europe/Moscow

------------------------------

If you can process records separately, then you can probably write some code to return individual records and work with it, although likely painfully slow

If you need to work with a whole dataset at once for some reason, e.g. you need to pull some stats from it, then try doing whole thing in a code stage to avoid blueprism collections overhead or use external tools.

It doesn't look like we are getting Blueprism with 64 bit core before Half life 3 🙂

------------------------------

Andrey Kudinov

Project Manager

MobileTelesystems PJSC

Europe/Moscow

------------------------------

Related Content

- Open workbook - The message filter indicated that the application is busy error in Product Forum

- Unable to identify the process owning the foreground window. in Product Forum

- Decipher IDP apply exception reason at class verify in Product Forum

- Decipher VBO - add new action to update Batch Status in Product Forum

- MS Excel VBO - Exception has been thrown by the target of an invocation in Product Forum