- SS&C Blue Prism Community

- Get Help

- Product Forum

- RE: Looking for Efficiencies - Remove Duplicates f...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Looking for Efficiencies - Remove Duplicates from Collection

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

11-07-22 01:03 PM

Hello all.

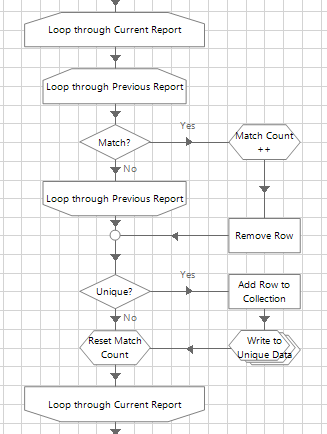

I've been tasked with tracking differences between two different Excel files for reporting purposes. My first thought was to gather them as collections through the Excel VBO and then Remove Duplicates via Collection Manipulation, but this had a poor success rate. So my second thought was to create a nested loop of the two collections (that contain the data from the spreadsheets) and do a comparison on the values of both (there are 20 columns per row to check against). If there was a match, remove the row and go to the next entry. If there was no match, write those values to a new collection - this would be my 'unique data'. Here's the flow, in case I haven't described that very well:

Now this works perfectly well in terms of accuracy. However, at the moment, it is only seemingly feasible for fairly small spreadsheets due to the time taken to execute. Admittedly, this is taken from stepping over in Debug mode, but for two collections with 117 rows, the time taken is about a minute. For 278 rows, it takes about 6 minutes, whereas for 400 rows it took about 15 minutes. I've just given up on waiting for it to do a collection with 827 rows after about an hour. The biggest collection it will ultimately need to parse through is about 1,800 rows. This obviously is not feasible with this seemingly exponential growth in processing power/time required.

Are there any existing code stages that might make this more manageable whilst maintaining accuracy? The other thought that occurred to me is to split the collections into batches of 100 rows or so, collect the unique data from those and then re-process these 'unique data' collections to get down to a final data set, although this feels very inefficient as well.

Open to all suggestions!

------------------------------

John Hammond

------------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

11-07-22 02:07 PM

If you have all the information in Excel files, you can use the Oledb connector to query both Excel file. (This one: https://digitalexchange.blueprism.com/dx/entry/3439/solution/data---oledb )

With this you can use SQL statements to select your information and use DISTINCT statement to delete duplicate rows (here you have more info about the DISTINCT scaler.com/topics/distinct-in-sql/)

The statement looks like this:

"SELECT distinct * FROM [Sheetname$]"

Hope this helps you!

See you in the community, bye 🙂

------------------------------

Pablo Sarabia

Solution Manager & Architect

Altamira Assets Management

Madrid

------------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

11-07-22 02:11 PM

UniqueRowsCollection = DuplicatesCollection.DefaultView.ToTable(true, "ColA", "ColB", "ColC")------------------------------

John Carter

Professional Services

Blue Prism

------------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

11-07-22 03:26 PM

Having just had a little play with it, I configured 'Set Connection' just with the filepath to the Excel document in the Database field as mentioned above before opening a connection - both of these were stepped over succesfully, but then when I tried to execute after this, I was presented with the following:

Internal : Could not execute code stage because exception thrown by code stage: ExecuteNonQuery requires an open and available Connection. The connection's current state is closed.

EDIT: OK, ignore the above! I managed to get the connection established with a Connection string, although the 'distinct' SQL command seems very hit and miss, so I wonder if I'm doing anything incorrectly. I've merged the two spreadsheets together into a single one, saved it, and have the OLEDB connection pointing at this input file. The two files originally were exact copies of each other, each had one field renamed to 'THIS IS A TEST' and 'THIS IS ANOTHER TEST' accordingly, so I would expect all other rows (which have an exact match) to be removed. When I execute the command however, what was a file with ~530 lines gets reduced down to a file with 499 lines?

------------------------------

John Hammond

------------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Email to a Friend

- Report Inappropriate Content

12-07-22 05:11 AM

Can you try below code in Code stage:

Collection_Out=Collection_In.DefaultView.ToTable(True)

Input : Collection_In

Output: Collection_Out

Language : Visual Basic

No additional namespaces required

------------------------------

Joshna Dammala

RPA Developer

Asia/Kolkata

------------------------------

- Remove duplicates from collection but keep latest row? in Product Forum

- Remove Duplicate In Collection in Product Forum

- Remove duplicate rows based on 3 column names in Product Forum

- Looking for Efficiencies - Remove Duplicates from Collection in Product Forum

- Removing Duplication from a collection but keeping related reference in Product Forum